Critical Capabilities For Edge Computing In Industrial IoT Scenarios

Edge computing is emerging across sectors every day: from building elevators that know to stop and lock their doors open if there’s an earthquake, to water valves that can shut down if there’s a leak somewhere. But how can an enterprise select the most effective edge computing platform for its IIoT use cases? This article explains the capabilities of edge computing that can help enterprises decide.

Edge computing is one of the core components of the Industrial Internet of Things (IIoT). It plays a critical role in accelerating the journey towards Industry 4.0. Large enterprises from manufacturing, transportation, oil and gas, and smart buildings are relying on edge computing platforms to implement the next generation of automation.

What is Edge?

Edge computing in the context of IIoT brings compute closer to the origin of data. Without the edge computing layer, the data acquired from assets and sensors connected to the machines and devices will be sent to a remote data center or the public/private cloud. This may result in increased latency, poor data locality, and increased bandwidth costs.

Edge computing is an intermediary between the devices and the cloud or data center. It applies business logic to the data ingested by devices while providing analytics in real-time. It acts as a conduit between the origin of the data and the cloud, which dramatically reduces the latency that may occur due to the round trip to the cloud. Since the edge can process and filter the data that needs to be sent to the cloud, it also reduces the bandwidth cost. Finally, edge computing will help organizations with data locality and sovereignty through local processing and storage.
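
To illustrate how filtering at the edge can cut bandwidth, here is a minimal sketch of a deadband filter, which forwards a reading to the cloud only when it has moved by more than a threshold since the last forwarded value. The function name `deadband_filter`, the `(timestamp, value)` reading shape, and the threshold are assumptions made for illustration; they do not come from any particular edge platform.

```python
from typing import Iterable, List, Optional, Tuple

Reading = Tuple[float, float]  # (timestamp_seconds, value); hypothetical shape


def deadband_filter(readings: Iterable[Reading], threshold: float) -> List[Reading]:
    """Return only the readings that differ from the last forwarded value
    by more than `threshold`. The first reading is always forwarded."""
    forwarded: List[Reading] = []
    last_value: Optional[float] = None
    for timestamp, value in readings:
        if last_value is None or abs(value - last_value) > threshold:
            forwarded.append((timestamp, value))
            last_value = value
    return forwarded


if __name__ == "__main__":
    # Hypothetical temperature samples from one sensor.
    samples = [(0.0, 20.0), (1.0, 20.1), (2.0, 20.2), (3.0, 21.5), (4.0, 21.6), (5.0, 19.0)]
    to_cloud = deadband_filter(samples, threshold=1.0)
    print(f"forwarded {len(to_cloud)} of {len(samples)} readings")
```

In this sketch the small fluctuations stay at the edge and only the significant changes travel upstream; a real deployment would choose the threshold per sensor type.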

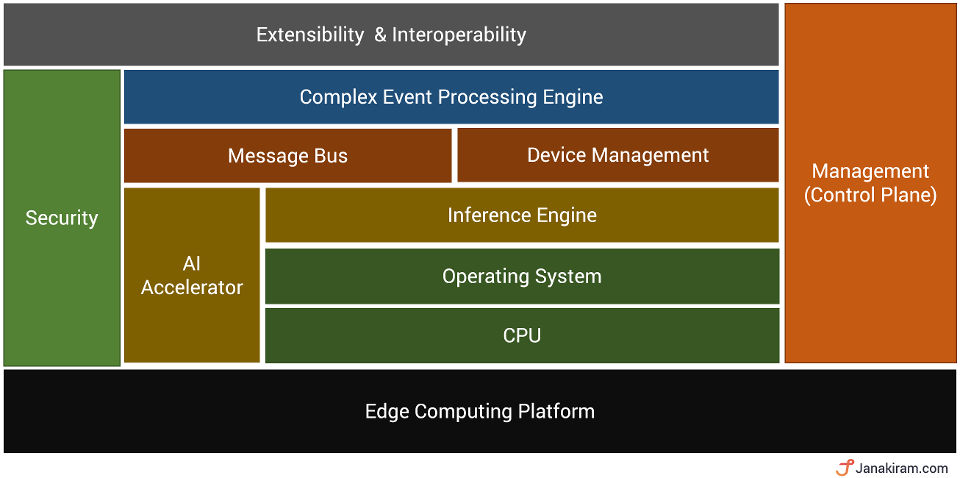

Building Blocks of the Edge

Customers considering deploying an edge computing platform must evaluate whether the platform supports the essential capabilities needed to deliver the benefits promised by the edge.

Processor and OS

Edge computing typically runs on resource-constrained devices. Compared to desktops and servers, edge computing devices usually have less horsepower. They have a smaller form factor, making it possible to deploy them in space-constrained environments. Since most System on Chip (SoC) devices run on ARM processors, edge computing software should support both x86 and ARM architectures.

Modern mobile phones are becoming increasingly powerful and can act as edge computing devices. Ruggedized, purpose-built mobile phones based on Android can run the edge software stack.

Edge computing software should be available for Microsoft Windows, Linux, and Android, making it easy for customers to target existing environments running mainstream operating systems.

AI Accelerator & Inferencing Engine

The edge is becoming the destination for running machine learning and deep learning in an offline mode. When compared to an enterprise data center or the public cloud infrastructure, edge computing has limited resources and computing power. When deep learning models are deployed at the edge, they don’t get the same horsepower as the public cloud, which may slow down inferencing, a process where trained models are used for classification and prediction.

To bridge the gap between the data center and the edge, chip manufacturers are building niche, purpose-built accelerators that significantly speed up model inferencing. These modern processors assist the CPU of the edge devices by taking over the complex mathematical calculations needed for running deep learning models. While these chips are not comparable to the GPUs running in the cloud, they do accelerate the inferencing process. This results in faster prediction, detection, and classification of data ingested to the edge layer.

Some of the popular AI accelerators include the NVIDIA Jetson family of GPUs, Intel Movidius and Myriad VPUs, Google Edge TPUs, and Qualcomm Hexagon. These chips come with drivers and toolkits to optimize deep learning models for targeting the AI accelerator. For example, NVIDIA expects the models to be in TensorRT format. Intel ships OpenVINO Toolkit for model optimization. Google needs the models in TensorFlow Lite format, and Qualcomm wants the models to get converted into Deep Learning Container (DLC) format through its Neural Processing SDK.

An edge computing platform should support mainstream AI accelerators and the optimizations needed for each of the chips.

When an AI accelerator is missing, but inferencing is needed at the edge, the platform should support software-based AI acceleration through advanced model optimization techniques such as quantization.
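
As a rough illustration of what quantization does, the sketch below maps floating-point weights to 8-bit integers with a single shared scale factor (symmetric per-tensor quantization) and then reconstructs approximate values. It is a teaching example in plain Python, not the scheme used by any specific toolkit; the function names `quantize_int8` and `dequantize` are hypothetical.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Fall back to a scale of 1.0 when every weight is zero (or the list is empty).
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.0]  # hypothetical model weights
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    worst = max(abs(w - r) for w, r in zip(weights, restored))
    print(f"int8 values: {q}, worst-case error: {worst:.6f}")
```

Each weight now fits in one byte instead of four, and integer arithmetic is generally cheaper on constrained CPUs; the cost is a small rounding error of at most half the scale factor per weight.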

Message Bus

A message bus acts as the conduit to access the data points and events ingested into the system. The message bus is integrated with messaging protocols and brokers such as MQTT and OPC UA, message-oriented middleware, and cloud-based messaging services.

The message bus acts as a single source of truth for all the components of the edge computing platform. Telemetry data enters the message bus through the message broker and is then processed by the CEP engine. The output from the CEP engine, machine learning inferencing, and other data processing elements is sent back to the same message bus.

The message bus acts as a pipeline with multiple stages where each stage is responsible for reading, processing, and updating the data. Each component is typically associated with a topic to isolate and partition the data.
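
The staged, topic-based flow described here can be sketched with a small in-process publish/subscribe bus. This is a simplified illustration only: the `MessageBus` class, the topic names, the message fields, and the 80 °C alert threshold are all assumptions, and a real platform would sit on top of an actual broker rather than Python callbacks.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List

Message = Dict[str, Any]
Handler = Callable[[Message], None]


class MessageBus:
    """Minimal in-process bus: handlers subscribe to topics and receive
    every message published on that topic, synchronously."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: Message) -> None:
        for handler in list(self._subscribers[topic]):
            handler(message)


def build_pipeline(bus: MessageBus, alerts: List[Message]) -> None:
    """Wire two stages: normalize raw telemetry, then detect overheating."""

    def normalize(msg: Message) -> None:
        # Stage 1: convert Fahrenheit to Celsius and republish.
        celsius = (msg["temp_f"] - 32) * 5 / 9
        bus.publish("telemetry/normalized", {"device": msg["device"], "temp_c": celsius})

    def detect(msg: Message) -> None:
        # Stage 2: publish an alert when the hypothetical limit is exceeded.
        if msg["temp_c"] > 80.0:
            bus.publish("alerts", msg)

    bus.subscribe("telemetry/raw", normalize)
    bus.subscribe("telemetry/normalized", detect)
    bus.subscribe("alerts", alerts.append)


if __name__ == "__main__":
    bus, alerts = MessageBus(), []
    build_pipeline(bus, alerts)
    bus.publish("telemetry/raw", {"device": "pump-1", "temp_f": 212.0})
    bus.publish("telemetry/raw", {"device": "pump-2", "temp_f": 68.0})
    print(alerts)
```

Because each stage reads from one topic and writes to another, stages can be added or replaced without touching the others, which is the isolation the topic partitioning is meant to provide.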

Device Management

Since the edge acts as the gateway to multiple devices, it has to track, monitor, and manage connected device fleets. Through the edge, we need to ensure that the IoT devices work properly and securely after they have been configured and deployed. The edge has to provide secure access to the connected devices, monitor health, detect and remotely troubleshoot problems, and even manage software and firmware updates.

The edge will maintain a registry of devices to manage the lifecycle. From onboarding to decommissioning, each device will be managed via edge computing’s device management service.
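
One way to picture such a registry is as a small state machine that only permits valid lifecycle transitions. The sketch below is an assumption-laden illustration: the three states, the allowed transitions, and the `DeviceRegistry` API are invented for this example and are not taken from any specific device management service.

```python
from enum import Enum
from typing import Dict, Set


class DeviceState(Enum):
    ONBOARDED = "onboarded"
    ACTIVE = "active"
    DECOMMISSIONED = "decommissioned"


# Hypothetical lifecycle rules: a decommissioned device cannot come back.
ALLOWED_TRANSITIONS: Dict[DeviceState, Set[DeviceState]] = {
    DeviceState.ONBOARDED: {DeviceState.ACTIVE, DeviceState.DECOMMISSIONED},
    DeviceState.ACTIVE: {DeviceState.DECOMMISSIONED},
    DeviceState.DECOMMISSIONED: set(),
}


class DeviceRegistry:
    """Tracks each device's lifecycle state and rejects invalid transitions."""

    def __init__(self) -> None:
        self._devices: Dict[str, DeviceState] = {}

    def onboard(self, device_id: str) -> None:
        if device_id in self._devices:
            raise ValueError(f"device {device_id!r} is already registered")
        self._devices[device_id] = DeviceState.ONBOARDED

    def transition(self, device_id: str, new_state: DeviceState) -> None:
        current = self._devices[device_id]  # KeyError if the device is unknown
        if new_state not in ALLOWED_TRANSITIONS[current]:
            raise ValueError(f"cannot move {device_id!r} from {current.value} to {new_state.value}")
        self._devices[device_id] = new_state

    def state(self, device_id: str) -> DeviceState:
        return self._devices[device_id]


if __name__ == "__main__":
    registry = DeviceRegistry()
    registry.onboard("valve-17")  # hypothetical device ID
    registry.transition("valve-17", DeviceState.ACTIVE)
    registry.transition("valve-17", DeviceState.DECOMMISSIONED)
    print(registry.state("valve-17"))
```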

If the customer has existing cloud-based IoT deployments, the device management component of the edge has to integrate with it seamlessly.

Complex Event Processing (CEP) Engine

A Complex Event Processing (CEP) engine is responsible for aggregating, processing, and transforming streams of events coming via the message bus in real-time. In the context of IIoT and edge, the CEP engine aggregates telemetry data ingested by a variety of sensors connected to the devices. It then normalizes and transforms the streaming data for advanced queries and analytics.

The CEP engine has visibility into every data point ingested into the edge computing layer. Apart from aggregation and processing, it can even invoke custom functions or perform inferencing based on the registered machine learning models.

Complex Event Processing is mainly used to address low latency requirements. It is usually expected to respond to events within a few milliseconds from the time that an event arrives.
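
A common building block in stream processing of this kind is a sliding time window. The sketch below keeps a running average over the most recent events so that each new event is handled in near-constant time. The class name `SlidingWindowAverage` is hypothetical, and the code assumes events arrive with non-decreasing timestamps; production CEP engines typically express this kind of logic in a query language instead.

```python
from collections import deque
from typing import Deque, Tuple


class SlidingWindowAverage:
    """Running average over events whose timestamps fall within the last
    `window_seconds`. Assumes timestamps arrive in non-decreasing order."""

    def __init__(self, window_seconds: float) -> None:
        if window_seconds <= 0:
            raise ValueError("window_seconds must be positive")
        self.window_seconds = window_seconds
        self._events: Deque[Tuple[float, float]] = deque()
        self._total = 0.0

    def add(self, timestamp: float, value: float) -> float:
        """Record one event and return the average over the current window."""
        self._events.append((timestamp, value))
        self._total += value
        # Evict events that are a full window (or more) older than the new one.
        cutoff = timestamp - self.window_seconds
        while self._events[0][0] <= cutoff:
            _, old_value = self._events.popleft()
            self._total -= old_value
        return self._total / len(self._events)


if __name__ == "__main__":
    window = SlidingWindowAverage(window_seconds=10.0)
    # Hypothetical (timestamp, vibration level) events from one machine.
    for ts, level in [(0.0, 10.0), (5.0, 20.0), (10.0, 30.0), (12.0, 40.0)]:
        print(ts, window.add(ts, level))
```

Keeping a running total avoids rescanning the whole window on every event, which matters when the engine is expected to respond within a few milliseconds.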

Secured Access

An edge computing layer should integrate with existing LDAP and IAM systems to provide role-based access control (RBAC). Each IT/OT persona should be associated with a well-defined role designated to perform specific actions. For example, an application developer role shouldn’t have permission to perform firmware upgrades.
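
The firmware example can be expressed as a simple role-to-permission lookup. The sketch below is illustrative only: the role names, the action names, and the `is_allowed` helper are assumptions, and in practice the mapping would come from the organization's LDAP or IAM system rather than a hard-coded table.

```python
from typing import Dict, FrozenSet

# Hypothetical roles and actions; a real system would load these from LDAP/IAM.
ROLE_PERMISSIONS: Dict[str, FrozenSet[str]] = {
    "application_developer": frozenset({"deploy_application", "view_telemetry"}),
    "ot_engineer": frozenset({"upgrade_firmware", "view_telemetry"}),
}


def is_allowed(role: str, action: str) -> bool:
    """Return True only if the role explicitly grants the action.
    Unknown roles get no permissions (deny by default)."""
    return action in ROLE_PERMISSIONS.get(role, frozenset())


if __name__ == "__main__":
    print(is_allowed("application_developer", "upgrade_firmware"))
    print(is_allowed("ot_engineer", "upgrade_firmware"))
```

Denying by default means a misspelled or unregistered role fails closed rather than silently gaining access.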

Extensibility

For the devices deployed in an IIoT environment, an edge computing platform is a gateway and hub that needs to be integrated with different resources from the data center and public cloud. From material resource planning software to the corporate directory services to a message broker to the data lake, an edge computing platform has to integrate with a diverse set of services and applications.

Interoperability and extensibility become the key for enterprises to realize the return on investment from edge computing. An edge computing platform should be programmable to allow developers to build highly customized solutions.

Management

A typical edge computing environment may have millions of sensors and actuators connected to tens of thousands of edge devices. Managing the configuration, security, applications, machine learning models, and CEP queries can become extremely challenging.

A scalable edge computing environment demands a robust, reliable management platform that acts as the single pane of glass to control the edge devices. It becomes the unified control plane to onboard users, register edge devices, and integrate cloud services and existing message brokers. It also provides a friendly user interface to manage the workflows and tasks involved in running the environment.

The above capabilities help enterprises choose the right edge computing platform for IIoT use cases.

This article was written by Janakiram Msv from Forbes and was legally licensed through the NewsCred publisher network. Please direct all licensing questions to legal@newscred.com.
